AI-generated threats are getting more sophisticated

Phishing no longer looks like a sketchy Gmail address and poor grammar. Now, it looks like your CEO asking you to pay an urgent invoice. Or a fake voicemail from a board member. Or even a deepfake Teams call.

And it’s not just hypothetical. In 2024, a finance worker in Hong Kong was tricked into paying out $25 million to fraudsters who used deepfake technology to pose as the company’s chief financial officer on a video conference call.

By some industry estimates, AI-generated attacks are up 4,000% since 2022, and they’re only getting more sophisticated.

Attackers are now using AI to analyse social media, emails, and online behaviour to create hyper-personalised phishing messages. These scams mimic writing styles, reference recent activity, and even continue legitimate email threads to avoid suspicion. Deepfake audio and video tools are being used to convincingly impersonate executives and board members, leading to large-scale financial fraud and data leaks.

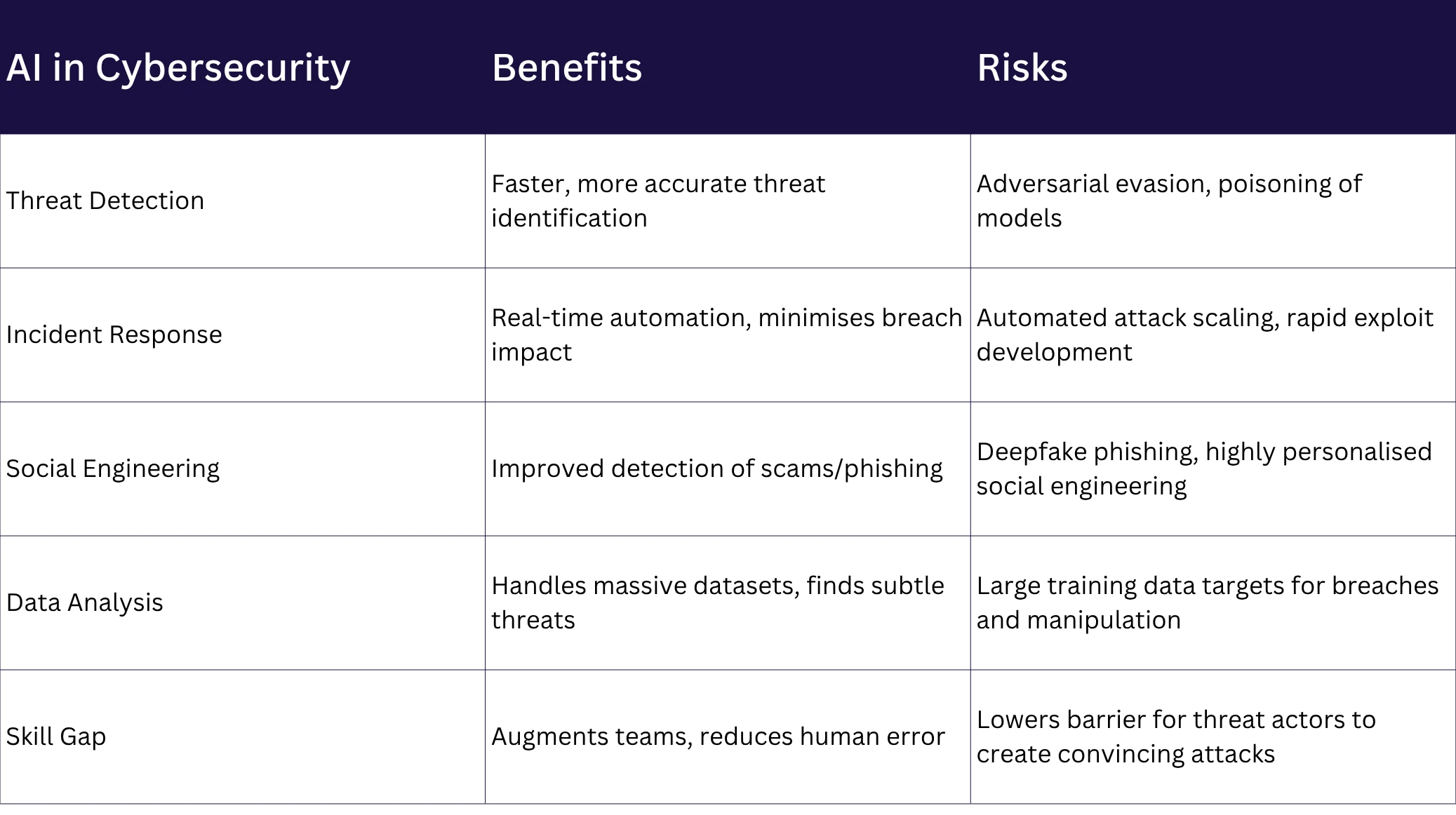

Static security tools can’t keep up. AI-driven phishing campaigns continuously evolve to bypass traditional defences, pairing psychological triggers with perfect grammar and a near-flawless imitation of the sender’s style. Meanwhile, self-learning malware is adapting in real time, hiding in plain sight by mimicking normal system activity.

In addition, the rise of Cybercrime-as-a-Service means even low-skilled attackers now have access to powerful AI tools on the dark web. With these tools, attackers can run automated A/B tests to fine-tune phishing content for maximum engagement.

Large Language Models (LLMs) and open-source AI tools are giving threat actors the ability to:

- Write malicious code in real time

- Tailor attacks using scraped personal info

- Learn how your org operates

That’s what makes AI-generated threats so dangerous. They don’t feel like attacks. They feel like business as usual.

How confident are you that your team would spot these attacks today?